Last June I was in Madrid for TechEd Conference. The main focus was The Cloud. Microsoft has actually done a really good job and the platform is very mature. I’m not going to lie by saying that I will prefer to host everything in the cloud than doing it on-premise. A few PowerShell scripts and voila you got yourself the desired environments. And with the instance slider, you got yourself the amount of instances that you could need for a specific period. Try to do something similar with your on-premises infrastructure. Another awesome feature is that from now on you will only pay for the environments if they are running. This means that DEV and TEST can be shut down while they are not being used:

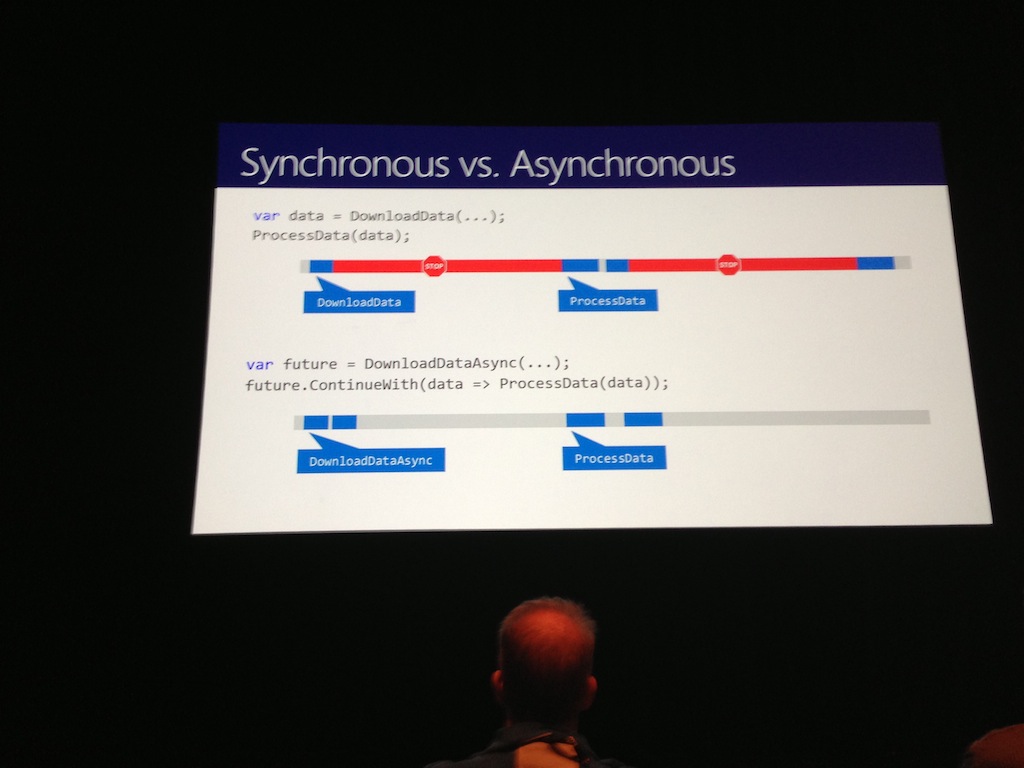

Lucian Wischik gave three talks with regards to async arriving to C# 5.0 (no callbacks needed). Hmmmm, I wonder were we have seen this before, who said F#?

Another really interesting talk was David Starr regarding Brownfield Development. We all have seen this huge amount of spaghetti code right?

But how do we actually ensure that we don’t get to this point? And how do we avoid that methods grow to become huge? I think the main problem is because we use a toolbox that actually allows this to happen, mostly cos it’s part of it’s verbosity …

… well the answer isn’t that difficult. Even though Dustin Campbell gave a good talk, Microsoft really needs to understand that they are not going to catch the businessmen attention by showing a how F# is really good to solve Project Euler problems. What Microsoft needs to do, is to show on one of their platforms how using F# provides a more clean and robust way to make quality software, and we might able to help out on this one, stay tuned:

Finally, not everything in Madrid had to be hard work, there were also time to some pleasure:

As it has been a while since I went to TechEd and because I have to give a small talk for the rest of Delegate A/S employees, I needed to get the PowerPoints and some videos. I was a bit bored and cos I love F# I decided to make a small file crawler. Things I noticed while creating the app is how simple it is to convert from a sequential to parallel app. Just convert the sequence to an array and then apply parallelism, as simple as that. The only issue I found while converting the app to run in parallel is that printfn is not thread-safe so a few changes to Console.WriteLine and sprintf and voila, it’s 100% parallel. This is one of the strong sides of F#, like any other .NET language, it has access to the whole Frameworks API.

namespace Stermon.Tools.TechEdFileCrawler

open System

open System.IO

open System.Net

open System.Text.RegularExpressions

open FSharp.Net

type TechEdFileCrawlerLib() =

member private this.absoluteUrl url href =

WebUtility.HtmlDecode(Uri(url).GetLeftPart(UriPartial.Authority) + href)

member private this.ensureUrl url (match':string) (tags:string) =

match'

|> fun s -> s.Replace(@"href=""", String.Empty)

|> fun s ->

let rec cleanTags (s':string) tags' =

match tags' with

| x::xs -> cleanTags (s'.Replace(@""">" + x, String.Empty)) xs

| [] -> s'

cleanTags s (tags.Split('|') |> Array.toList)

|> fun s ->

match s.StartsWith("http") with

| false -> this.absoluteUrl url s

| true -> s

member this.Crawl url (tags:string) =

let tags' =

tags.Split('|')

|> Array.map(fun x -> @"href.*" + x)

|> Array.reduce(fun x y -> x + "|" + y)

let html = Http.Request(url)

let m = Regex.Match(html, tags')

let rec hrefs (m:Match) = seq{

match (m.Success) with

| true ->

yield! [for g in m.Groups -> this.ensureUrl url g.Value tags]

yield! hrefs (m.NextMatch())

| false -> ()

}

hrefs m

member this.PagesRec url tag =

let rec pages acc sq = seq{

match (sq |> Seq.toList) with

| x::xs -> yield! pages (Seq.append acc sq) (this.Crawl x tag)

| [] -> yield url; yield! acc

}

pages Seq.empty (this.Crawl url tag)

member this.Download href =

try

match Http.RequestDetailed(href).Body with

| HttpResponseBody.Binary bytes ->

let name (href':string) =

href'

|> fun s -> s.Replace(@"http://", String.Empty)

|> fun s -> s.Replace(@"/", "_")

match (Directory.Exists(@".\techEd")) with

| false -> Directory.CreateDirectory(@".\techEd") |> ignore

| true -> ()

File.WriteAllBytes(@".\techEd\" + (name href), bytes)

Console.WriteLine(sprintf "%O" (@"-Saved: " + href))

| _ -> ()

with

| exn ->

let en = Environment.NewLine

Console.WriteLine(

sprintf @"-An exception occurred:%s >>%s %s >>%s"

en exn.Message en href)Remark: There is no need to actually change the algorithm, so it is still as readable as it was before. Like stated before, change three lines and voila, the app runs in parallel …

open System

open Stermon.Tools.TechEdFileCrawler

let getArg argv key =

let arg = Array.tryFind(fun (a:string) -> a.StartsWith(key)) argv

match arg with

| Some x -> x.Replace(key, String.Empty)

| None -> failwith ("Missing argument: " + key)

[<EntryPoint>]

let main argv =

try

let tec = TechEdFileCrawlerLib()

tec.PagesRec (getArg argv "url=") (getArg argv "next=")

// |> Seq.iter(fun x ->

// tec.Crawl x (getArg argv "tags=")

// |> Seq.iter(fun x -> tec.Download x))

|> Seq.toArray

|> Array.Parallel.iter(fun x ->

tec.Crawl x (getArg argv "tags=")

|> Seq.toArray

|> Array.Parallel.iter(fun x -> tec.Download x))

with

| exn ->

let iexn =

match (exn.InnerException) with

| null -> "No InnerException."

| iexn' -> iexn'.Message

printfn "An exception occurred:\n -%s\n -%s" exn.Message iexn

0The crawler is called from a terminal like this:

TechEdFileCrawler.exe ^

"url=http://channel9.msdn.com/Events/TechEd/Europe/2013?sort=status" ^

"next=next" ^

"tags=Slides|Zip"And will save the files and write the following output:

...

-Saved: http://video.ch9.ms/sessions/teched/eu/2013/ATC-B210.pptx

-Saved: http://video.ch9.ms/sessions/teched/eu/2013/OUC-B306.pptx

-Saved: http://video.ch9.ms/sessions/teched/eu/2013/DEV-IL201.zip

-Saved: http://video.ch9.ms/sessions/teched/eu/2013/OUC-B302.pptx

-Saved: http://video.ch9.ms/sessions/teched/eu/2013/WCA-B208.pptx

-Saved: http://video.ch9.ms/sessions/teched/eu/2013/ATC-B204.pptx

-An exception occurred:

>>The remote server returned an error: (404) Not Found.

>>http://video.ch9.ms/sessions/teched/eu/2013/WPH-H201.zip

-An exception occurred:

>>The remote server returned an error: (404) Not Found.

>>http://video.ch9.ms/sessions/teched/eu/2013/WPH-H202.zip

-Saved: http://video.ch9.ms/sessions/teched/eu/2013/SES-H201.zip

-An exception occurred:

>>The remote server returned an error: (404) Not Found.

>>http://video.ch9.ms/sessions/teched/eu/2013/WPH-H203.zip

-Saved: http://video.ch9.ms/sessions/teched/eu/2013/DBI-H212.zip

-An exception occurred:

>>The remote server returned an error: (404) Not Found.

>>http://video.ch9.ms/sessions/teched/eu/2013/WPH-H206.zip

-Saved: http://video.ch9.ms/sessions/teched/eu/2013/ATC-B214.pptx

-Saved: http://video.ch9.ms/sessions/teched/eu/2013/WAD-B291.pptx

-Saved: http://video.ch9.ms/sessions/teched/eu/2013/SES-H204.zip

-Saved: http://video.ch9.ms/sessions/teched/eu/2013/DBI-H213.zip

-An exception occurred:

>>The remote server returned an error: (404) Not Found.

>>http://video.ch9.ms/sessions/teched/eu/2013/WPH-H204.zip

-Saved: http://video.ch9.ms/sessions/teched/eu/2013/SES-H202.zip

-Saved: http://video.ch9.ms/sessions/teched/eu/2013/OUC-B307.pptx

...p.s.: It wouldn’t be that difficult to convert the code above to a generic website file crawler …